Experimenting with the Model Context Protocol (MCP)

Separating concerns in a composable way through a contextual layer.

Following my first blogpost on the Artificial Intelligence problem space and the impact of continuous advancements in the software development lifecycle, one technology that excites me is the Model Context Protocol (MCP) from Anthropic. It reminds me of the Language Server Protocol (LSP) used in Integrated Development Environments (IDEs).

Conceptually, MCP is straightforward: it brings the popular client-server architecture into the Large Language Model (LLM) space. There are many use cases for automation. As engineers, we spend a significant amount of time calling different systems to fetch information: monitoring platforms, source code and Machine Learning model hosting services, issue trackers, datastores, and internal APIs. Imagine using natural language as an abstraction instead of dealing with the intricacies of all these systems.
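To give a feel for what travels between client and server, here is a minimal sketch of a tool invocation. MCP messages are JSON-RPC 2.0, and `tools/call` is the method a client uses to run a tool. The tool name `list_monitors` and its `status` argument are hypothetical, made up for illustration rather than taken from any real server.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and argument names, for illustration only.
message = make_tool_call(1, "list_monitors", {"status": "alert"})
print(message)
```

In practice a client library builds and sends these messages for you; the point is only that the wire format is plain, well-understood JSON-RPC.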

This is already possible through function calling or tool use. What MCP brings to the table is that it reduces an O(M*N) integration problem to O(M+N), where M is the number of clients and N the number of tools (also see Sourcegraph’s blogpost). Instead of every client needing a bespoke integration with every tool, each client and each tool implements the protocol once. It does this by separating concerns in a composable way through a contextual layer.
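The counting argument is easy to check with a small sketch. The client and tool lists below are illustrative assumptions, picked from names mentioned in this post; the numbers only show how the two approaches scale.

```python
# Illustrative lists only; any M clients and N tools would do.
clients = ["Claude Desktop", "Cursor", "mcp-cli"]
tools = ["Grafana", "Stripe", "Datadog", "issue tracker"]


def bespoke_integrations(m: int, n: int) -> int:
    """Without a shared protocol, every client needs its own adapter per tool."""
    return m * n


def protocol_integrations(m: int, n: int) -> int:
    """With a shared protocol, each client and each tool implements it once."""
    return m + n


m, n = len(clients), len(tools)
print(f"bespoke: {bespoke_integrations(m, n)}, shared protocol: {protocol_integrations(m, n)}")
# prints "bespoke: 12, shared protocol: 7"
```

The gap widens quickly: adding one more tool costs M new adapters in the bespoke world, but a single new server with a shared protocol.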

There is already a growing list of available MCP servers. Companies such as Grafana are building their own servers while others, like Stripe, are building flexible toolkits that support MCP.

On the client side, Claude Desktop is the default platform. However, there are already several others, including IDEs like Cursor and even Command Line Interfaces (CLIs) that can communicate with MCP servers using different models, for privacy or other reasons.

The Anthropic team is also building tools to facilitate debugging. One example is the MCP Inspector, which is much needed to create an end-to-end experience.

I experimented with a set of open-source and custom servers, and the results, even with lightweight models running locally, have been decent.

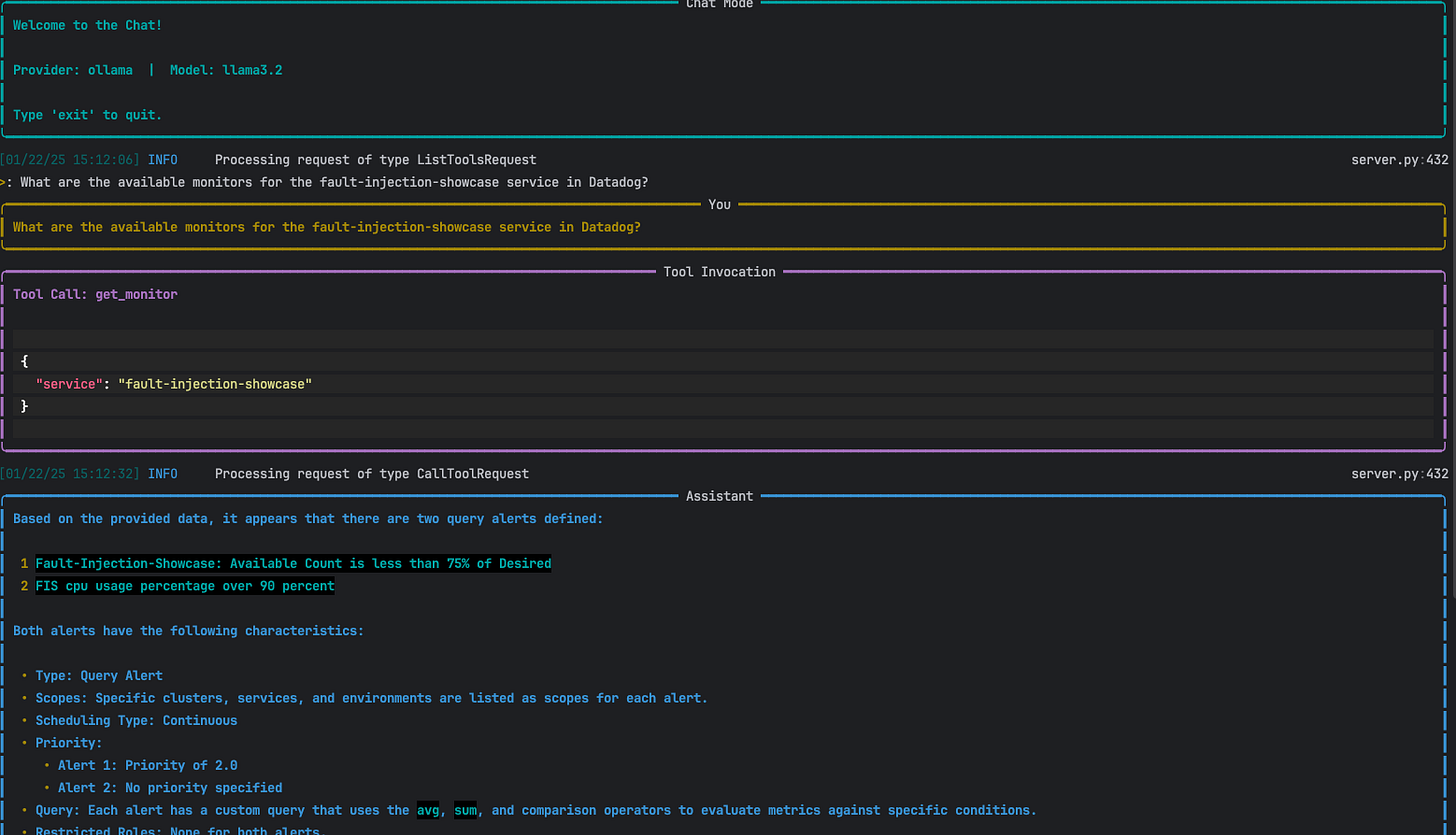

Below you can see a toy example using mcp-cli, a small server I built for Datadog, and Llama 3.2 running locally with Ollama. This runs using a single command:

uv run mcp-cli --server datadog --provider ollama --model llama3.2

Looking forward to more standardisation in this space!